Important Notice

More information about previous v2 releases can be found here. Use the "Find a release" search bar to look up a particular release.

Quick Installation (v2)

Disclaimer

Version 2.X is an alpha release, available only through the "fast" channel. Later releases will move to a stable channel once the new operator matures.

For installation steps for the previous stable version (1.X), see the quick installation guide for version 1.X.

Prerequisites

The information below applies only to Open Data Hub Operator v2.0.0 and later releases.

Installing Open Data Hub requires OpenShift Container Platform version 4.10+. All screenshots and instructions are from OpenShift 4.12. For the purposes of this quick start, we used try.openshift.com on AWS.

The tutorials require an OpenShift cluster with a minimum of 16 CPUs and 32 GB of memory in total across all OpenShift worker nodes.
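To check a cluster against this minimum from a script, the Python sketch below sums the allocatable CPU and memory of worker nodes, given Node objects as dictionaries (for example, the `items` array from `oc get nodes -o json`). It assumes workers carry the `node-role.kubernetes.io/worker` label, reads "32GB" as 32 GiB, and uses allocatable rather than raw capacity, which is the more conservative reading. The quantity parser handles only common suffixes and is not a complete implementation of Kubernetes resource quantities.

```python
from decimal import Decimal

# Subset of Kubernetes quantity suffixes; not a complete parser.
_SUFFIXES = {
    "Ki": Decimal(2**10), "Mi": Decimal(2**20), "Gi": Decimal(2**30), "Ti": Decimal(2**40),
    "k": Decimal(10**3), "M": Decimal(10**6), "G": Decimal(10**9), "T": Decimal(10**12),
    "m": Decimal("0.001"),  # millicores, e.g. "3500m" is 3.5 CPUs
}

# Assumption: worker nodes carry the standard OpenShift worker role label.
WORKER_LABEL = "node-role.kubernetes.io/worker"
MIN_CPUS = Decimal(16)
MIN_MEMORY_BYTES = Decimal(32 * 2**30)  # assumption: "32GB" read as 32 GiB


def parse_quantity(value: str) -> Decimal:
    """Convert a simple quantity string such as '3500m' or '15Gi' to a number."""
    for suffix, factor in _SUFFIXES.items():
        if value.endswith(suffix):
            return Decimal(value[: -len(suffix)]) * factor
    return Decimal(value)  # plain number, e.g. "8" CPUs or bytes


def worker_totals(nodes: list) -> tuple:
    """Sum allocatable CPU and memory (bytes) over worker nodes only."""
    cpu = Decimal(0)
    memory = Decimal(0)
    for node in nodes:
        labels = node.get("metadata", {}).get("labels", {})
        if WORKER_LABEL not in labels:
            continue  # skip control-plane and other non-worker nodes
        allocatable = node["status"]["allocatable"]
        cpu += parse_quantity(allocatable["cpu"])
        memory += parse_quantity(allocatable["memory"])
    return cpu, memory


def meets_tutorial_minimum(nodes: list) -> bool:
    """True if worker nodes together offer at least 16 CPUs and 32 GiB."""
    cpu, memory = worker_totals(nodes)
    return cpu >= MIN_CPUS and memory >= MIN_MEMORY_BYTES
```

For example, two workers that each report `cpu: "8"` and `memory: "16Gi"` exactly meet the minimum, while control-plane nodes are ignored regardless of their size.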

Installing the New Open Data Hub Operator

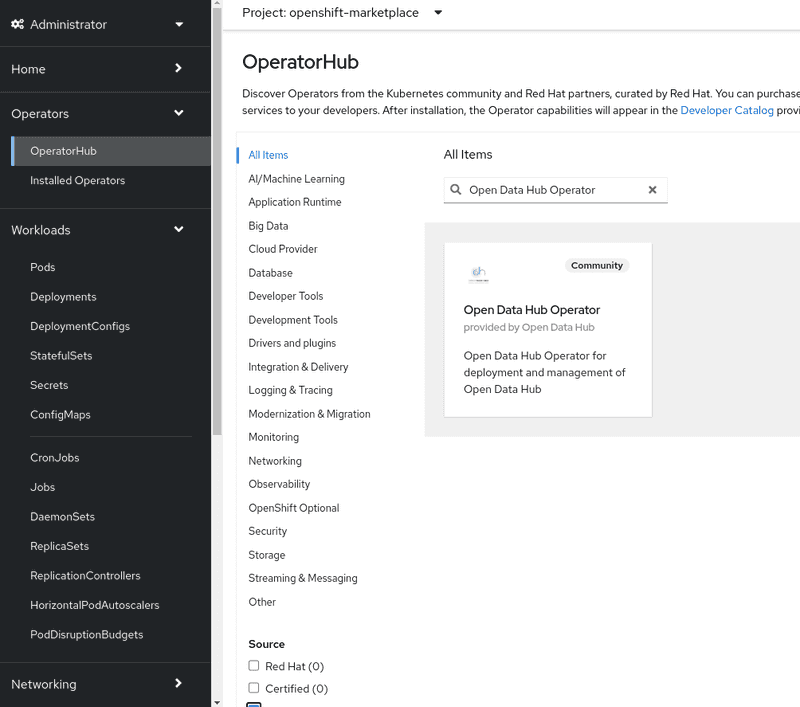

The Open Data Hub operator is available for deployment in the OpenShift OperatorHub as a Community Operator. You can install it from the OpenShift web console by following the steps below:

-

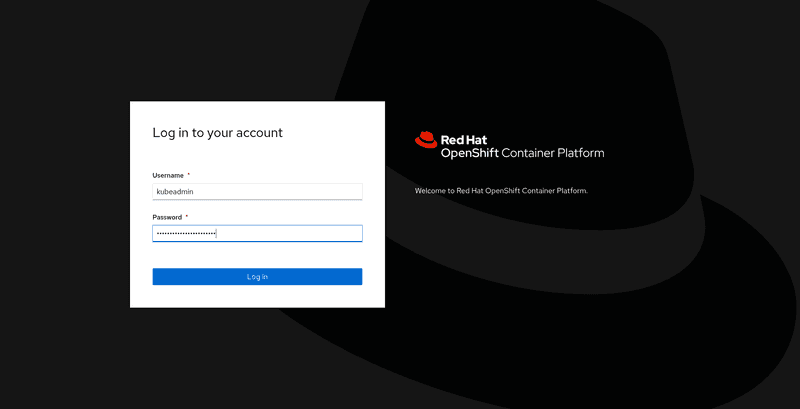

From the OpenShift web console, log in as a user with

cluster-adminprivileges. For a developer installation from try.openshift.com, thekubeadminuser will work.

- In the left-hand menu, go to **Operators** -> **OperatorHub**, then either:
  - filter for **Open Data Hub Operator**, or
  - select **AI/Machine Learning** and look for the **Open Data Hub Operator** icon.

-

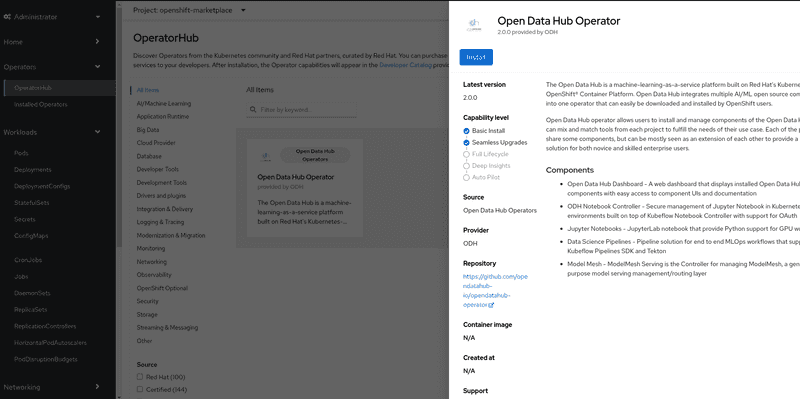

Click

Continuein the "Show community Operator" dialog if it pops out. Click theInstallbutton to install the Open Data Hub operator.

-

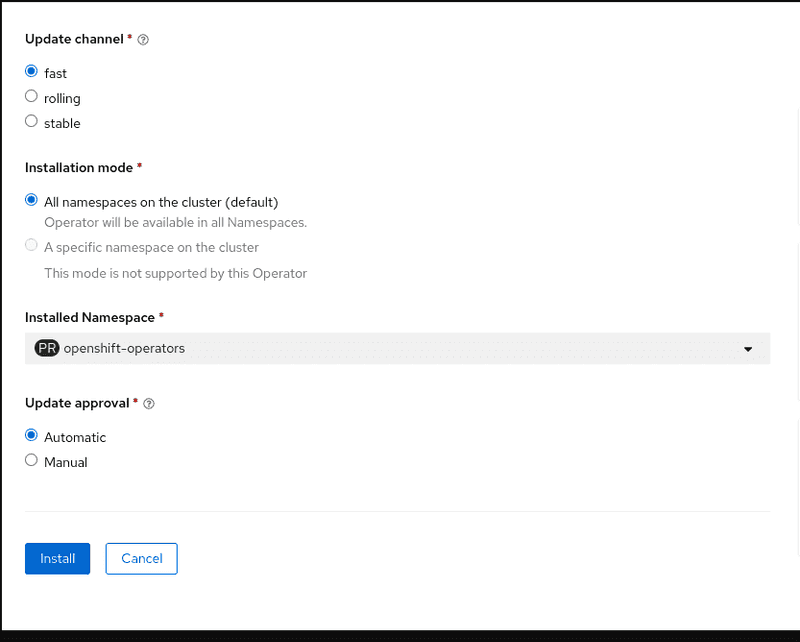

The subscription creation view will offer a few options including

Update Channel, make sure thefastchannel is selected. ClickInstallto deploy the opendatahub operator into theopenshift-operatorsnamespace.

-

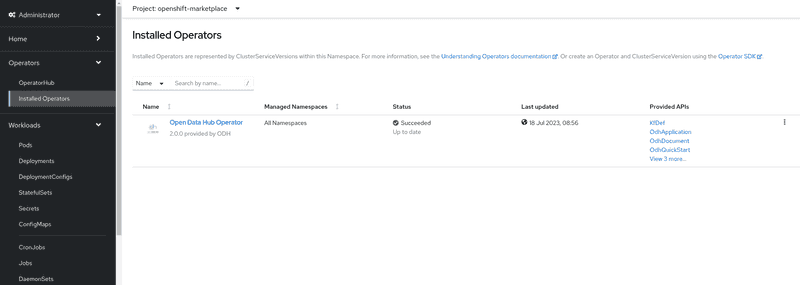

To view the status of the Open Data Hub operator installation, find the Open Data Hub Operator under

Operators->Installed Operators. It might take a couple of minutes to show, but once theStatusfield displaysSucceeded, you can proceed to create a DataScienceCluster instance
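Installing from OperatorHub creates an Operator Lifecycle Manager (OLM) `Subscription` behind the scenes. As a minimal sketch for scripted installs, the Python below builds an equivalent manifest for the **fast** channel and checks whether a `ClusterServiceVersion` has reached the `Succeeded` phase that the **Status** field reports. The package name `opendatahub-operator` and the catalog source `community-operators` in `openshift-marketplace` are assumptions; confirm them (for example with `oc get packagemanifests -n openshift-marketplace`) before applying anything.

```python
import json


def odh_subscription(channel: str = "fast",
                     namespace: str = "openshift-operators",
                     package: str = "opendatahub-operator",  # assumed package name
                     source: str = "community-operators") -> dict:  # assumed catalog source
    """Build an OLM Subscription manifest mirroring the console install."""
    return {
        "apiVersion": "operators.coreos.com/v1alpha1",
        "kind": "Subscription",
        "metadata": {"name": package, "namespace": namespace},
        "spec": {
            "channel": channel,  # the alpha 2.x builds are on "fast"
            "name": package,
            "source": source,
            "sourceNamespace": "openshift-marketplace",
            "installPlanApproval": "Automatic",
        },
    }


def csv_succeeded(csv: dict) -> bool:
    """True once a ClusterServiceVersion reports status.phase == 'Succeeded'."""
    return csv.get("status", {}).get("phase") == "Succeeded"


if __name__ == "__main__":
    # Emit JSON suitable for piping to `oc apply -f -`.
    print(json.dumps(odh_subscription(), indent=2))
```

The printed JSON could be applied with `oc apply -f -`, since `oc apply` accepts JSON as well as YAML.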

Create a DataScienceCluster instance

-

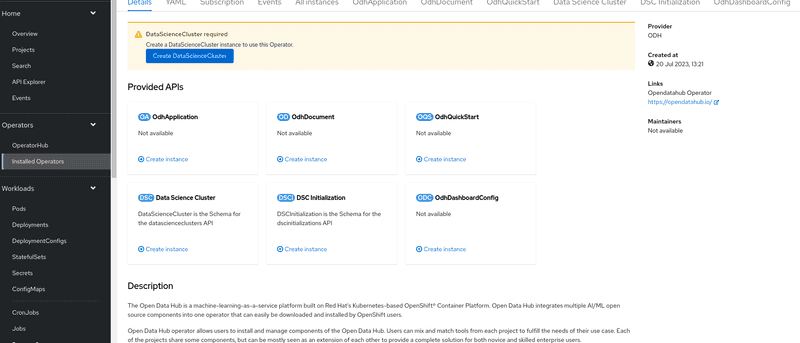

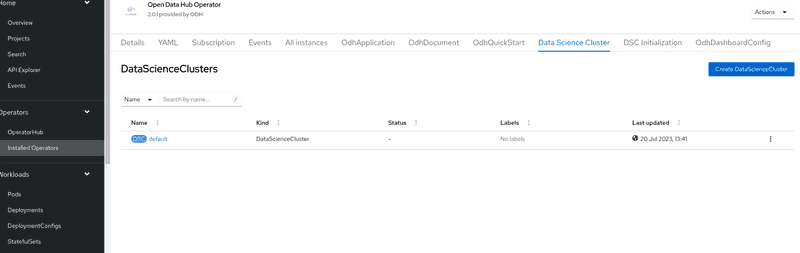

Click on the

Open Data Hub OperatorfromInstalled Operatorspage to bring up the details for the version that is currently installed.

- There are two ways to create a DataScienceCluster instance:
  - Click the **Create DataScienceCluster** button in the **DataScienceCluster required** warning at the top of the page ("Create a DataScienceCluster instance to use this Operator.").
  - Click the **Data Science Cluster** tab, then click the **Create DataScienceCluster** button.

  Both lead to a new view called "Create DataScienceCluster". By default, the `opendatahub` namespace/project is used to host all applications.

-

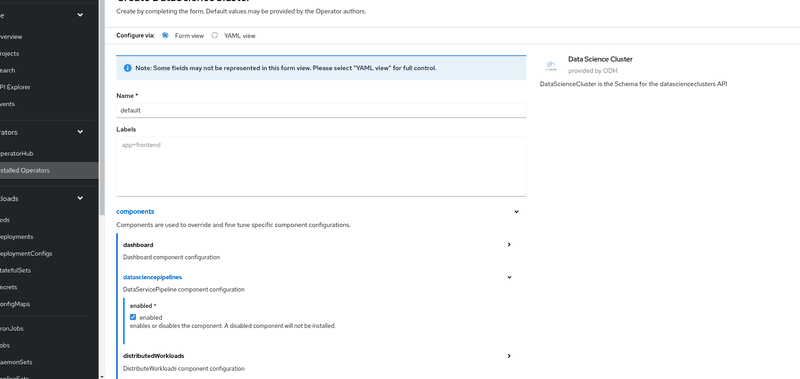

In the view of "Create DataScienceCluster", user can create DataScienceCluster instance in two ways with

componentsfields.-

Form view: a. Fill in

Namefield b. In thecomponentssection, click>to expand the list of currently supported core components. Check the set of components enabled by default and tick/untick the box in each component section to tailor the selection.

-

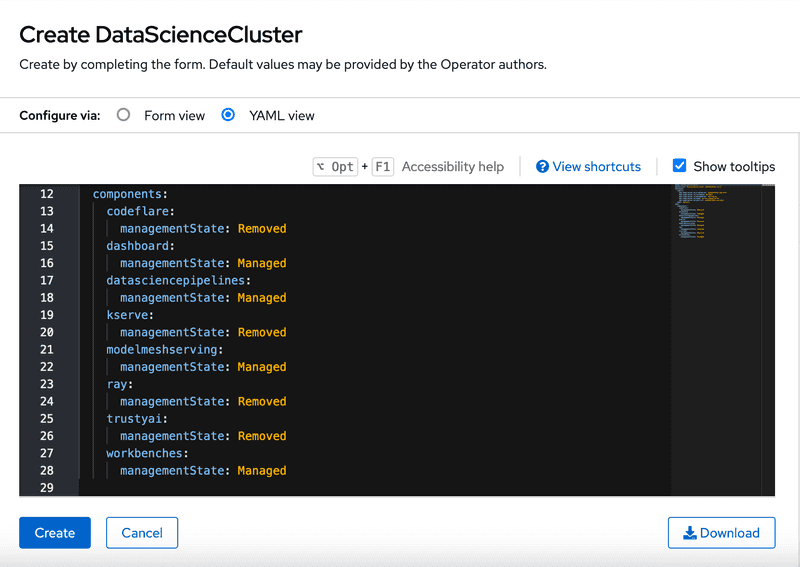

YAML view: a. View the detail schema by expanding the right-hand sidebar

b. Read ODH Core components to get the full list of supported components.


- Click the **Create** button to finish. The instance is created within seconds.

-

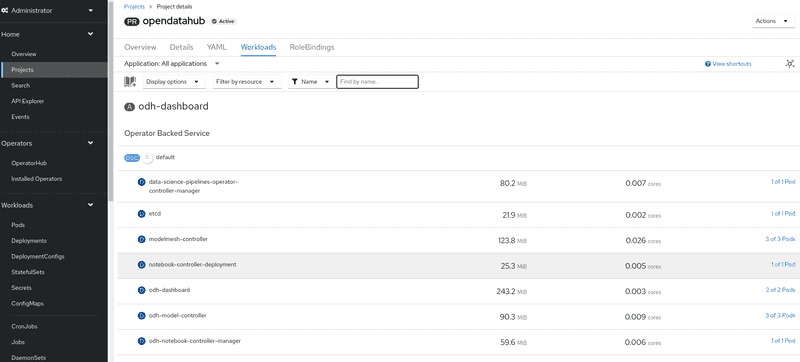

Verify the installation by viewing the project workload. Click

HomethenProjects, select "opendatahub" project, in theWorkloadstab to view enabled compoenents. These should be running.
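The YAML view edits a DataScienceCluster manifest directly. The Python sketch below builds one from a mapping of component keys to on/off flags, and lists pods in the `opendatahub` project that are not yet running, given a pod list such as the output of `oc get pods -n opendatahub -o json`. The `apiVersion` and the per-component `managementState: Managed`/`Removed` layout are assumptions based on later 2.x releases; early 2.x alpha builds used a different schema, so compare against the schema shown in the YAML view sidebar. Component keys in the usage example are illustrative only.

```python
# Assumption: API group/version used by later 2.x releases; check the YAML view schema.
API_VERSION = "datasciencecluster.opendatahub.io/v1"


def data_science_cluster(name: str, components: dict) -> dict:
    """Build a DataScienceCluster manifest.

    `components` maps a component key (illustrative, e.g. "dashboard") to
    True to enable it or False to disable it.
    """
    return {
        "apiVersion": API_VERSION,
        "kind": "DataScienceCluster",
        "metadata": {"name": name},
        "spec": {
            "components": {
                # Assumed layout: Managed means enabled, Removed means disabled.
                key: {"managementState": "Managed" if enabled else "Removed"}
                for key, enabled in components.items()
            }
        },
    }


def pods_not_ready(pod_list: dict) -> list:
    """Return names of pods whose phase is neither Running nor Succeeded.

    `pod_list` is shaped like `oc get pods -n opendatahub -o json` output.
    """
    healthy = {"Running", "Succeeded"}  # Succeeded covers completed job pods
    return [
        pod["metadata"]["name"]
        for pod in pod_list.get("items", [])
        if pod.get("status", {}).get("phase") not in healthy
    ]
```

For instance, `data_science_cluster("example", {"dashboard": True, "kserve": False})` yields a manifest with the dashboard managed and KServe removed. The pod check looks only at the pod phase; it does not inspect container readiness probes.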

Open Data Hub Dashboard

Data scientists and other users will most likely use Open Data Hub features through the Open Data Hub Dashboard. To find its URL, go to **Menu** > **Networking** > **Routes**, select the `opendatahub` project, and look in the **Location** column of the `odh-dashboard` route.
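The same URL can be assembled from the Route object itself. This Python sketch takes a Route as a dictionary (for example, from `oc get route odh-dashboard -n opendatahub -o json`) and approximates the **Location** value from `spec.host`, `spec.path`, and whether `spec.tls` is set; it ignores less common settings such as custom ports.

```python
def route_url(route: dict) -> str:
    """Approximate the console's Location value for an OpenShift Route."""
    spec = route["spec"]
    scheme = "https" if spec.get("tls") else "http"  # TLS-enabled routes use https
    path = spec.get("path", "")  # most routes, like the dashboard, have no path
    return f"{scheme}://{spec['host']}{path}"
```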

Dependencies

- To use the "kserve" component, install the following two operators from OperatorHub before enabling it in the DataScienceCluster CR:
  - Red Hat OpenShift Serverless Operator from the "Red Hat" catalog.
  - Red Hat OpenShift Service Mesh Operator from the "Red Hat" catalog.

- To use the "datasciencepipeline" component, install the following operator from OperatorHub before enabling it in the DataScienceCluster CR:
  - Red Hat OpenShift Pipelines Operator from the "Red Hat" catalog.

- To use the "distributedworkloads" component, install the following operator from OperatorHub before enabling it in the DataScienceCluster CR:
  - CodeFlare Operator from the "Community" catalog.

- To use the "modelmesh" component, install the following operator from OperatorHub before enabling it in the DataScienceCluster CR:
  - Prometheus Operator from the "Community" catalog.
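The dependency list can also be kept as data so a script can flag missing operators before a component is enabled. In this hypothetical helper, the component keys and operator display names are copied from the list above; the `installed_operators` input is an assumption, since a real cluster identifies operators by package or `ClusterServiceVersion` name rather than by display name, so a mapping step would be needed in practice.

```python
# Component -> operators that must be installed first (display names from the list).
REQUIRED_OPERATORS = {
    "kserve": [
        "Red Hat OpenShift Serverless Operator",
        "Red Hat OpenShift Service Mesh Operator",
    ],
    "datasciencepipeline": ["Red Hat OpenShift Pipelines Operator"],
    "distributedworkloads": ["CodeFlare Operator"],
    "modelmesh": ["Prometheus Operator"],
}


def missing_dependencies(enabled_components, installed_operators) -> dict:
    """Map each enabled component to the required operators not yet installed.

    Components without listed dependencies are never reported.
    """
    installed = set(installed_operators)
    missing = {}
    for component in enabled_components:
        needed = [op for op in REQUIRED_OPERATORS.get(component, []) if op not in installed]
        if needed:
            missing[component] = needed
    return missing
```

An empty result means every enabled component's listed prerequisites are present.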